I had an interesting chat with a colleague last week about document parsing challenges in their project. With OpenAI currently offering 10 million free tokens daily for GPT-4o-mini, I couldn't resist running a quick experiment over the weekend. After over a decade in AI/ML, I've learned that sometimes the best insights come from these impromptu investigations.

LLM Document Classification: Setting Up GPT-4o-mini

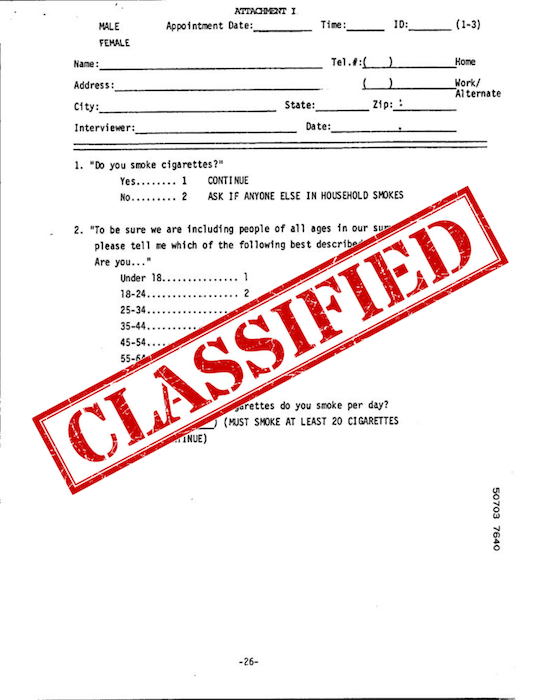

I decided to test GPT-4o-mini on the RVL-CDIP dataset - a classic benchmark containing 16 different document types (letters, forms, emails, etc.). What made this interesting is that traditionally, you'd need massive supervised training (we're talking 320K images) and complex architectures to get good results. But I was curious: how well could a general-purpose vision model handle this without any special training?

Why AI Document Classification Matters

The landscape of document classification has evolved dramatically. While specialized models like Donut achieve impressive accuracy (95.3% on RVL-CDIP), what caught my interest was a different question: could a general-purpose vision model, with zero task-specific training, serve as a valuable tool for rapid prototyping and exploration?

In my consulting work, I've noticed a common pattern: teams often jump straight to building complex, specialized systems before fully understanding their document processing needs. This can lead to months of development time before getting any real insights about the challenges specific to their document types. What if we could get meaningful insights in hours instead of months?

Testing GPT-4o-mini on RVL-CDIP: The Experiment

I kept things straightforward:

- Grabbed a balanced subset of the RVL-CDIP test set (160 images, 10 per class, to keep costs reasonable)

- Set up zero-shot classification using GPT-4o-mini

- No OCR, no text extraction - purely vision-based classification

- Simple prompt engineering to frame the task

The Results

After running 160 test samples (10 per document class), the results were fascinating:

Overall Performance:

- 65% accuracy on zero-shot classification

- Only 2.5% invalid answers (4 out of 160 samples)
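One caveat worth quantifying: 160 samples is a small test set, so the 65% figure carries real sampling uncertainty. The sketch below is not part of the evaluation script in this post; it assumes the standard Wilson score interval at roughly 95% confidence and uses 104 correct out of 160 (which is what 65% of 160 works out to).

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# 65% of 160 samples = 104 correct predictions
low, high = wilson_interval(104, 160)
print(f"Overall accuracy 65.0%, approximate 95% interval: {low:.1%} to {high:.1%}")

# With only 10 samples, a single class is far less certain (e.g. 9/10 correct)
class_low, class_high = wilson_interval(9, 10)
print(f"Per-class 90% (9/10), approximate 95% interval: {class_low:.1%} to {class_high:.1%}")
```

The per-class intervals are wide, so the rankings below are best read as rough indications rather than firm measurements.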

What really caught my eye were the per-class performances:

Standout Successes:

- Email: Perfect 100% accuracy (10/10)

- Resume, Handwritten, Advertisement, Scientific Publication: 90% accuracy

- News Articles, Questionnaires: 80% accuracy

- Letters, Memos: 70% accuracy

Areas for Improvement:

- Forms, Presentations: 20% accuracy

- Scientific Reports, Budgets: 40% accuracy

- Specifications, File Folders: 50% accuracy

- Invoices: 60% accuracy
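As a quick cross-check, the per-class counts should add up to the overall figure. A small sketch (the dictionary simply restates the numbers reported in this post, with 10 samples per class):

```python
# Correct predictions out of 10 per class, as reported in this post
correct_per_class = {
    "email": 10,
    "resume": 9,
    "handwritten": 9,
    "advertisement": 9,
    "scientific publication": 9,
    "news article": 8,
    "questionnaire": 8,
    "letter": 7,
    "memo": 7,
    "invoice": 6,
    "specification": 5,
    "file folder": 5,
    "scientific report": 4,
    "budget": 4,
    "form": 2,
    "presentation": 2,
}

SAMPLES_PER_CLASS = 10

total_correct = sum(correct_per_class.values())
total_samples = SAMPLES_PER_CLASS * len(correct_per_class)
overall_accuracy = total_correct / total_samples

print(f"{total_correct}/{total_samples} = {overall_accuracy:.0%}")  # 104/160 = 65%
```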

These results revealed some fascinating patterns. The model excels at documents with distinctive visual layouts (emails, resumes) or consistent formatting (scientific publications, news articles). Where it struggles most is with documents that can vary widely in appearance (forms, presentations) or those that share similar layouts with other categories (scientific reports vs. publications, budgets vs. invoices).

What's particularly interesting is that this level of performance comes purely from visual analysis - no OCR, no text extraction, just the model looking at document layouts. In my consulting work, I've seen teams spend months building complex pipelines to achieve similar accuracy levels, especially for distinguishing between similar document types like letters and memos.
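To check which categories actually get mistaken for each other, one option is to count (true, predicted) pairs among the errors. The sketch below assumes records shaped like the `sample_results` entries the script in this post collects (`true_label` and `predicted_label` keys); the `top_confusions` helper and the demo records are made up for illustration and are not results from the experiment.

```python
from collections import Counter
from typing import Dict, List, Tuple


def top_confusions(samples: List[Dict], k: int = 5) -> List[Tuple[Tuple[str, str], int]]:
    """Return the k most frequent (true_label, predicted_label) pairs among errors."""
    pairs = Counter(
        (s["true_label"], s["predicted_label"])
        for s in samples
        if s["predicted_label"] != s["true_label"]
    )
    return pairs.most_common(k)


# Made-up records for illustration only
demo_samples = [
    {"true_label": "budget", "predicted_label": "invoice"},
    {"true_label": "budget", "predicted_label": "invoice"},
    {"true_label": "scientific report", "predicted_label": "scientific publication"},
    {"true_label": "email", "predicted_label": "email"},
]

for (true_label, predicted_label), count in top_confusions(demo_samples):
    print(f"{true_label} -> {predicted_label}: {count}")
```

Running this over the saved per-sample results would show whether the weak classes fail mostly toward one neighbour (say, budgets read as invoices) or scatter across many labels.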

Technical Implementation

For those interested in replicating this experiment, I've included the complete code below. It's straightforward Python, using the OpenAI API and Hugging Face's datasets library.

```python
#!/usr/bin/env python3
"""
Evaluate GPT-4o or GPT-4o-mini on a balanced subset of the RVL-CDIP test split
using purely vision-based zero-shot classification.
"""

import base64
import json
import logging
import os
import random
import shutil
import time
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from io import BytesIO
from typing import Dict, List, Optional, Tuple

# Install these if missing: pip install openai datasets Pillow python-dotenv
from datasets import load_dataset
from dotenv import load_dotenv
from openai import OpenAI
from PIL import Image

# Set up logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Load environment variables from .env file
load_dotenv()

# Fail early with a clear message if the key is missing
api_key = os.getenv("OPENAI_API_KEY")
if not api_key:
    raise RuntimeError("OPENAI_API_KEY is not set; add it to your environment or .env file")
print(f"Using key with prefix {api_key[:5]}")

client = OpenAI(api_key=api_key)

# List of 16 classes in RVL-CDIP
LABEL_NAMES = [
    "letter", "form", "email", "handwritten", "advertisement",
    "scientific report", "scientific publication", "specification",
    "file folder", "news article", "budget", "invoice",
    "presentation", "questionnaire", "resume", "memo",
]


@dataclass
class EvalConfig:
    """Configuration for evaluation run"""
    model_name: str = "gpt-4o-mini"
    samples_per_class: int = 10
    temp_dir: str = "temp_images"
    max_retries: int = 3
    batch_size: int = 1
    temperature: float = 0.0
    max_tokens: int = 20
    system_prompt: Optional[str] = None


def is_valid_image(example) -> bool:
    """
    Check if an image in the dataset is valid and can be opened.

    Args:
        example: Dataset example containing an image

    Returns:
        bool: True if image is valid, False otherwise
    """
    try:
        if isinstance(example['image'], Image.Image):
            # If it's already a PIL Image, try to verify it
            example['image'].verify()
            return True
        elif isinstance(example['image'], bytes):
            # If it's bytes, try to open it
            img = Image.open(BytesIO(example['image']))
            img.verify()
            return True
        return False
    except Exception as e:
        logger.debug(f"Invalid image found: {e}")
        return False


def encode_image_to_base64(img_path: str) -> str:
    """
    Load an image from disk and return a base64-encoded string.
    """
    try:
        with open(img_path, "rb") as f:
            encoded = base64.b64encode(f.read()).decode("utf-8")
        return encoded
    except Exception as e:
        logger.error(f"Error encoding image {img_path}: {e}")
        raise


def create_balanced_sample(dataset, samples_per_class: int) -> List[int]:
    """
    Create a balanced sample of indices ensuring equal representation of all classes.

    Args:
        dataset: The dataset to sample from
        samples_per_class: Number of samples to select for each class

    Returns:
        List of selected indices
    """
    # Group valid examples by class
    class_indices: Dict[int, List[int]] = defaultdict(list)

    # Iterate through dataset with error handling
    for idx in range(len(dataset)):
        try:
            example = dataset[idx]
            if not is_valid_image(example):
                continue
            class_indices[example['label']].append(idx)
        except Exception as e:
            logger.warning(f"Error processing example {idx}: {e}")
            continue

    # Nothing usable: return early so min() below is never called on an empty sequence
    if not class_indices:
        return []

    # Verify we have enough valid samples for each class
    min_samples = min(len(indices) for indices in class_indices.values())
    if samples_per_class > min_samples:
        logger.warning(f"Requested {samples_per_class} samples per class, but only {min_samples} available for some classes")
        samples_per_class = min_samples

    # Sample equally from each class
    balanced_indices = []
    for class_id in range(len(LABEL_NAMES)):
        if class_id in class_indices:
            selected = random.sample(class_indices[class_id], samples_per_class)
            balanced_indices.extend(selected)

    return balanced_indices


def cleanup_temp_files(temp_dir: str):
    """Remove temporary image directory and contents"""
    try:
        if os.path.exists(temp_dir):
            shutil.rmtree(temp_dir)
            logger.info(f"Cleaned up temporary directory: {temp_dir}")
    except Exception as e:
        logger.error(f"Error cleaning up {temp_dir}: {e}")


def gpt4o_zero_shot_classify_image(
    base64_img: str,
    candidate_labels: List[str],
    config: EvalConfig,
    attempt: int = 0
) -> Tuple[str, float]:
    """
    Send an image to GPT-4o endpoint for zero-shot classification with retry logic.

    Returns the predicted label and a flag: 1.0 if the call succeeded and the
    answer was one of the candidate labels, otherwise ("INVALID", 0.0).
    """
    system_text = config.system_prompt or (
        "You are a vision-based document classifier. "
        "Classify the input image into exactly one of the following document types:\n\n"
        + ", ".join(candidate_labels) + "\n\n"
        "Respond with only the document type that best fits the image."
    )

    messages = [
        {
            "role": "system",
            "content": system_text
        },
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Here is the document image to classify:"
                },
                {
                    "type": "image_url",
                    "image_url": {
                        "url": f"data:image/jpeg;base64,{base64_img}",
                        "detail": "auto"
                    }
                }
            ]
        }
    ]

    try:
        response = client.chat.completions.create(
            model=config.model_name,
            messages=messages,
            max_tokens=config.max_tokens,
            temperature=config.temperature
        )
        predicted_label = response.choices[0].message.content.strip().lower()
        # Only exact matches against the label list count as valid answers
        return (predicted_label, 1.0) if predicted_label in candidate_labels else ("INVALID", 0.0)
    except Exception as e:
        if attempt < config.max_retries - 1:
            logger.warning(f"API call failed, attempt {attempt + 1}/{config.max_retries}: {e}")
            time.sleep(2 ** attempt)  # Exponential backoff
            return gpt4o_zero_shot_classify_image(base64_img, candidate_labels, config, attempt + 1)
        logger.error(f"API Error after {config.max_retries} attempts: {e}")
        return ("INVALID", 0.0)


def evaluate_model(dataset, indices: List[int], config: EvalConfig) -> Dict:
    """
    Evaluate the model on the selected indices and return metrics.
    """
    os.makedirs(config.temp_dir, exist_ok=True)

    metrics = {
        "correct": 0,
        "total": 0,
        "invalid": 0,
        "class_accuracies": defaultdict(lambda: {"correct": 0, "total": 0}),
        "sample_results": []  # Track individual sample results
    }

    for i, idx in enumerate(indices):
        try:
            example = dataset[idx]
            if not is_valid_image(example):
                logger.warning(f"Skipping invalid image at index {idx}")
                continue

            pil_img = example["image"]
            label_id = example["label"]
            true_label = LABEL_NAMES[label_id]

            # Save and process image
            img_path = f"{config.temp_dir}/test_{i}.jpg"
            pil_img.save(img_path, format="JPEG")
            base64_img = encode_image_to_base64(img_path)

            # Get prediction
            predicted_label, api_success = gpt4o_zero_shot_classify_image(
                base64_img=base64_img,
                candidate_labels=LABEL_NAMES,
                config=config
            )

            # Track sample result
            sample_result = {
                "dataset_index": idx,
                "true_label": true_label,
                "predicted_label": predicted_label,
                "correct": predicted_label == true_label,
                "invalid": predicted_label == "INVALID",
                "api_success": api_success
            }
            metrics["sample_results"].append(sample_result)

            # Update metrics
            metrics["total"] += 1
            metrics["class_accuracies"][true_label]["total"] += 1

            if predicted_label == "INVALID":
                metrics["invalid"] += 1

            if predicted_label == true_label:
                metrics["correct"] += 1
                metrics["class_accuracies"][true_label]["correct"] += 1

            logger.info(f"Sample {i+1}/{len(indices)} | True: {true_label:<20} | Pred: {predicted_label}")

        except Exception as e:
            logger.error(f"Error processing example {idx}: {e}")
            continue

    return metrics


def print_metrics(metrics: Dict):
    """Print detailed evaluation metrics."""
    total = metrics["total"]
    accuracy = metrics["correct"] / total if total > 0 else 0.0
    invalid_pct = 100.0 * metrics["invalid"] / total if total > 0 else 0.0

    print("\n=== Final Report ===")
    print(f"Number of test samples: {total}")
    print(f"Overall Accuracy: {accuracy*100:.2f}%")
    print(f"Invalid answers: {metrics['invalid']} ({invalid_pct:.2f}%)")

    print("\nPer-class Accuracies:")
    for label, stats in metrics["class_accuracies"].items():
        class_acc = stats["correct"] / stats["total"] if stats["total"] > 0 else 0.0
        print(f"{label:<20}: {class_acc*100:.2f}% ({stats['correct']}/{stats['total']})")


def save_evaluation_results(metrics: Dict, config: EvalConfig, output_dir: str = "results"):
    """Save evaluation results and configuration for reproducibility"""
    os.makedirs(output_dir, exist_ok=True)

    # Group sample results by class for easier analysis
    samples_by_class = defaultdict(list)
    for sample in metrics["sample_results"]:
        samples_by_class[sample["true_label"]].append(sample)

    results = {
        'metrics': {
            'overall': {
                'total_samples': metrics["total"],
                'correct': metrics["correct"],
                'invalid': metrics["invalid"],
                'accuracy': metrics["correct"] / metrics["total"] if metrics["total"] > 0 else 0.0,
                'invalid_percentage': 100.0 * metrics["invalid"] / metrics["total"] if metrics["total"] > 0 else 0.0
            },
            'per_class': {
                label: {
                    'total': stats["total"],
                    'correct': stats["correct"],
                    'accuracy': stats["correct"] / stats["total"] if stats["total"] > 0 else 0.0,
                    'samples': samples_by_class[label]
                }
                for label, stats in metrics["class_accuracies"].items()
            }
        },
        'config': {
            'model_name': config.model_name,
            'samples_per_class': config.samples_per_class,
            'temperature': config.temperature,
            'max_tokens': config.max_tokens,
            'max_retries': config.max_retries
        },
        'timestamp': datetime.now().isoformat(),
        'label_names': LABEL_NAMES
    }

    filename = f"eval_results_{config.model_name}_{datetime.now().strftime('%Y%m%d_%H%M%S')}.json"
    output_path = os.path.join(output_dir, filename)

    with open(output_path, 'w') as f:
        json.dump(results, f, indent=2)

    logger.info(f"Saved evaluation results to {output_path}")


def main():
    # Create configuration
    config = EvalConfig(
        model_name="gpt-4o-mini",
        samples_per_class=10,
        temp_dir="temp_images"
    )

    # Load the RVL-CDIP dataset
    ds = load_dataset("aharley/rvl_cdip")
    test_ds = ds["test"]

    # Create balanced sample
    balanced_indices = create_balanced_sample(test_ds, config.samples_per_class)
    if not balanced_indices:
        logger.error("No valid samples found in the dataset")
        return

    try:
        # Evaluate model
        metrics = evaluate_model(
            test_ds,
            balanced_indices,
            config
        )

        # Print results
        print_metrics(metrics)

        # Save results
        save_evaluation_results(metrics, config)
    finally:
        # Clean up temporary files
        cleanup_temp_files(config.temp_dir)


if __name__ == "__main__":
    main()
```

Practical Implications

After analyzing these results, I'm seeing several compelling use cases:

- Quick Document Triage
  - Perfect for initial sorting of mixed document collections
  - Particularly strong for emails, resumes, and news articles
  - Could reduce manual sorting time significantly

- Hybrid Systems
  - Use as a fast first pass for document classification
  - Fall back to more specialized classifiers for tricky categories (forms, presentations)
  - Especially useful when OCR fails or for poor quality scans

- Rapid Prototyping
  - Great for quick proof-of-concepts
  - Helps identify which document types need specialized attention
  - Useful for estimating project complexity and resource needs
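The hybrid idea can be sketched as a small routing function. This is a minimal illustration under stated assumptions, not a tested design: `route_document`, `TRUSTED_LABELS`, and the two classifier callables are hypothetical names, and the trusted set is simply the classes that scored 80% or higher in this experiment.

```python
from typing import Callable, Set

# Assumption: trust the zero-shot pass only for classes that scored >= 80% here
TRUSTED_LABELS: Set[str] = {
    "email", "resume", "handwritten", "advertisement",
    "scientific publication", "news article", "questionnaire",
}


def route_document(
    image_path: str,
    fast_classifier: Callable[[str], str],
    fallback_classifier: Callable[[str], str],
    trusted_labels: Set[str] = TRUSTED_LABELS,
) -> str:
    """Keep the fast prediction for trusted labels; otherwise defer to the fallback."""
    label = fast_classifier(image_path)
    if label in trusted_labels:
        return label
    return fallback_classifier(image_path)


# Stand-in classifiers for demonstration; real ones would call a model
def demo_fast(path: str) -> str:
    return "email" if path.endswith("a.jpg") else "form"


def demo_fallback(path: str) -> str:
    return "invoice"


print(route_document("a.jpg", demo_fast, demo_fallback))  # fast label is trusted
print(route_document("b.jpg", demo_fast, demo_fallback))  # deferred to fallback
```

One caveat on the design: the per-class numbers in this post are measured per true class, while routing trusts a predicted label. A production version would ideally pick the trusted set from per-predicted-label precision, which this experiment did not report.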

The 65% accuracy might seem modest compared to state-of-the-art models like Donut (95.3%), but it's important to remember the context: this is zero-shot classification with no task-specific training or fine-tuning. The real value isn't in replacing specialized systems, but in providing a rapid experimentation tool that can deliver quick insights into document classification challenges.

Looking Forward

While these results with GPT-4o-mini are promising (and the free tokens make experimentation easy!), the data suggests several interesting directions:

- Category-Specific Prompting
  - Could we improve form/presentation classification with more specific prompts?
  - Might help distinguish between similar categories (scientific reports vs. publications)

- Hybrid Approaches
  - Use OCR selectively for challenging categories
  - Combine layout analysis with text content where needed
  - Could potentially push overall accuracy from current 65% to above 80%

- Domain Adaptation
  - Test on industry-specific document sets
  - Particularly interesting for financial documents (given the budget/invoice confusion)
  - Evaluate performance on non-English documents
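For the category-specific prompting idea, one possible starting point is to attach a short visual hint to each weak or easily confused label. The sketch below is untested against the benchmark: `CATEGORY_HINTS` and `build_system_prompt` are hypothetical, and the hint wording is a guess rather than anything validated.

```python
from typing import Dict, List

# Same 16 labels the evaluation script uses
LABEL_NAMES: List[str] = [
    "letter", "form", "email", "handwritten", "advertisement",
    "scientific report", "scientific publication", "specification",
    "file folder", "news article", "budget", "invoice",
    "presentation", "questionnaire", "resume", "memo",
]

# Hypothetical hints for the weakest or most confusable classes (wording is a guess)
CATEGORY_HINTS: Dict[str, str] = {
    "form": "printed fields, boxes, or blank lines meant to be filled in",
    "presentation": "slide-like pages with large headings and short bullet points",
    "scientific report": "internal technical report, often with a cover page or report number",
    "scientific publication": "journal-style article with abstract, columns, and references",
    "budget": "table of planned amounts by category or period",
    "invoice": "bill addressed to a payer with line items and a total due",
}


def build_system_prompt(labels: List[str], hints: Dict[str, str]) -> str:
    """Build a classifier prompt listing every label, with a hint where one exists."""
    lines = [
        "You are a vision-based document classifier.",
        "Classify the input image into exactly one of the following document types:",
        "",
    ]
    for label in labels:
        hint = hints.get(label)
        lines.append(f"- {label}: {hint}" if hint else f"- {label}")
    lines += ["", "Respond with only the document type, exactly as written above."]
    return "\n".join(lines)


prompt = build_system_prompt(LABEL_NAMES, CATEGORY_HINTS)
print(prompt)
```

Since `EvalConfig` in the script already has a `system_prompt` field, the generated text could be passed in as `EvalConfig(system_prompt=prompt)` and compared against the default prompt on the same balanced sample.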

Conclusion

This weekend experiment reinforced something I've learned over more than a decade in AI: sometimes the simple approach surprises you. The 65% accuracy achieved by GPT-4o-mini in zero-shot classification is quite impressive considering the complexity of the task and the lack of any task-specific training. While it won't replace specialized document processing systems anytime soon, this level of performance opens interesting possibilities for rapid prototyping and baseline systems.

Want to try this yourself? The code's above, and with OpenAI's current free token offer, there's no better time to experiment. Let me know what results you get - always curious to compare notes!

References

- Harley, A. W., Ufkes, A., & Derpanis, K. G. (2015). Evaluation of deep convolutional nets for document image classification and retrieval. In International Conference on Document Analysis and Recognition (ICDAR), pages 991-995.

- RVL-CDIP Dataset - aharley/rvl_cdip on Hugging Face. The RVL-CDIP (Ryerson Vision Lab Complex Document Information Processing) dataset contains 400,000 grayscale images in 16 classes, with 320,000 training images, 40,000 validation images, and 40,000 test images.